What we can learn from Australia's 2019 pre-election opinion polling failure

One thing the result of the 2019 Australian federal election demonstrated is that not all research methods are equal. None of the major opinion polls (Newspoll, Ipsos, Galaxy or Essential) predicted a Coalition win on a two-party preferred basis. As the LNP returns to power in what looks like a majority government, people are asking why the pre-election polls were so wrong. A significant number of voters changing their minds on the day is an unlikely explanation. I have several theories, all related to the research methods used, and I think there are several lessons that apply to anyone conducting customer research.

Quantitative research methods

Election opinion polling typically uses quantitative methods, which involve data in numerical form.

In the field of UX, quantitative methods typically include surveys, multivariate or A/B testing, web analytics and remote unmoderated usability testing.

Benefits:

- Statistically more likely to be representative of the population due to a larger sample size
- Generally more cost effective, especially if automated
- May be easier to analyse and collate data
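The sample-size benefit can be made concrete with the standard margin-of-error formula for a proportion. The following is a minimal Python sketch, assuming a simple random sample and a 95% confidence level (z = 1.96); the function name `margin_of_error` and the sample sizes are my own choices for illustration, not figures from any of the polls mentioned.

```python
import math


def margin_of_error(sample_size: int, proportion: float = 0.5, z: float = 1.96) -> float:
    """Approximate margin of error for a proportion from a simple random sample.

    Assumes z = 1.96 (95% confidence) and uses proportion = 0.5 by default,
    which gives the widest (most conservative) margin.
    """
    return z * math.sqrt(proportion * (1 - proportion) / sample_size)


# Illustrative sample sizes only (not taken from any real poll).
for n in (500, 1000, 2000, 4000):
    print(f"n={n}: +/- {margin_of_error(n) * 100:.1f} percentage points")
```

Note that quadrupling the sample only halves the margin, and that this formula covers sampling error alone. It says nothing about a sample that is skewed to begin with, which is where the drawbacks below come in.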

Drawbacks:

Following the election, the Association of Market and Social Research Organisations (AMSRO) apparently plans to conduct a review of political polling methods in Australia to understand why polling companies got it so wrong. The results will take time, but if I had to hazard a guess, here are some of the reasons. They all relate to the drawbacks and issues of quantitative research.

- Robot-automated surveying is not always accurate – According to The Guardian, “This problem is most pronounced in the so-called ‘robo-polls’, where a recorded message is dialled to an entire electorate, where a much smaller number of usually older voters punch in the numbers and then the results are weighted through algorithms that ensure results are ‘robust’.”
- Finding a statistically representative sample can be challenging – According to The Guardian, “All polls seek to reach a representative sample, that is one that generally reflects the broader population on age, gender, income and geography. But this is getting more challenging with traditional phone polls hampered by the reduction in the use of fixed landlines, particularly among younger voters. Not only are people harder to reach but they are also less likely to respond to surveys. Refusal rates are increasing to all forms of contact, and the lower the overall response rate, the more likely the sample is to be skewed.”
- Poor question design and/or handling of the resulting responses can lead to misleading results – My husband answered a phone poll asking something like “Which party would you choose on a two-party preferred basis?” He said neither! If 5-10% of the population said “neither” (which wasn’t an allowable response), how do you treat this data? While it may be easier to clean and remove this data, it is probably voters like these who decided the election, and discarding their responses is likely to have contributed significantly to the incorrect predictions.
- Not good for understanding opinions, as it gives no indication of ‘why’ – Since the 2019 Australian federal election, everyone has been trying to understand why the ALP lost. The quantitative opinion polls don’t provide any insight into this.
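To illustrate how the treatment of “neither” responses can flip a headline figure, here is a minimal Python sketch. The respondent counts, the party labels and the 70% split are all hypothetical and chosen purely for illustration; they are not real polling data, and I am not suggesting this is how any polling company actually handled such responses.

```python
def two_party_share(party_a: int, party_b: int) -> float:
    """Party A's share of the two-party vote, as a percentage."""
    return 100 * party_a / (party_a + party_b)


# Hypothetical poll of 1,000 respondents (made-up numbers for illustration).
party_a, party_b, neither = 465, 455, 80

# Approach 1: discard the "neither" responses before reporting.
dropped = two_party_share(party_a, party_b)

# Approach 2: assume (hypothetically) that 70% of the "neither" respondents
# end up voting for party B on election day, and the rest for party A.
share_breaking_to_b = 0.70
late_b = round(neither * share_breaking_to_b)
late_a = neither - late_b
allocated = two_party_share(party_a + late_a, party_b + late_b)

print(f"'Neither' discarded: party A on {dropped:.1f}%")
print(f"'Neither' allocated: party A on {allocated:.1f}%")
```

With these made-up numbers, party A appears to be ahead when the “neither” responses are discarded, but falls behind once those respondents are assumed to break mostly towards party B. The point is not the specific split, which is unknowable from the poll itself, but that the cleaning decision alone can change the predicted winner.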

Qualitative research methods

Qualitative research methods typically involve research where the data are not in the form of numbers. In the field of UX, methods typically include contextual interviews, focus groups, ethnography and moderated usability testing.

Benefits:

- Qualitative research is exploratory and seeks to understand the ‘how’ and ‘why’. It provides deeper, richer insights into user attitudes, motivations and issues.
- Greater opportunity to build rapport with the research participant and get to the truth.
- Can provide richer insights into the problem – this can help to form hypotheses for potential quantitative research.

Drawbacks:

- Can be more time consuming and expensive (but not always) – conducting 30 contextual interviews across Australia, for instance, can be time consuming to coordinate and run, as well as expensive in terms of both participant incentives and researcher resources.
- Potential geographic constraints – it is more difficult to sample participants around the country or globe if conducting face-to-face sessions.
- Data analysis can be more difficult and time consuming – it is difficult to automate the analysis of data from open questions, especially if the research was exploratory and unstructured.
- Participants may sometimes lie – they may lie, ‘fake good’ or hold back information if they are concerned about being judged by fellow participants or the researcher (which can be true for quantitative research as well).

What methods should UX practitioners use?

When designing customer research you need to be clear on the research objectives. According to Jane Abao, research objectives are different to research questions: “research objectives are statements of intention or actions intended”. Your objectives will help you define your research methodology, in particular whether you need quantitative methods, qualitative methods or a combination of both.

You also need to consider whether to use opinion-based methods, which focus on what people say, or behavioural methods, which focus on what people do. Our preference when designing user research is generally to focus on what people actually do (which occasionally conflicts with what they say). As Shane Ketterman states, that is why we don't listen to customers who tell us what they want, and why good user research really matters.

Validity and reliability are the two traditional goals you seek in your research to ensure that it is indeed ‘rigorous’. Using a mix of research methods helps you achieve this by triangulating your data, which we have previously written about in ‘Designing a robust user research approach’.

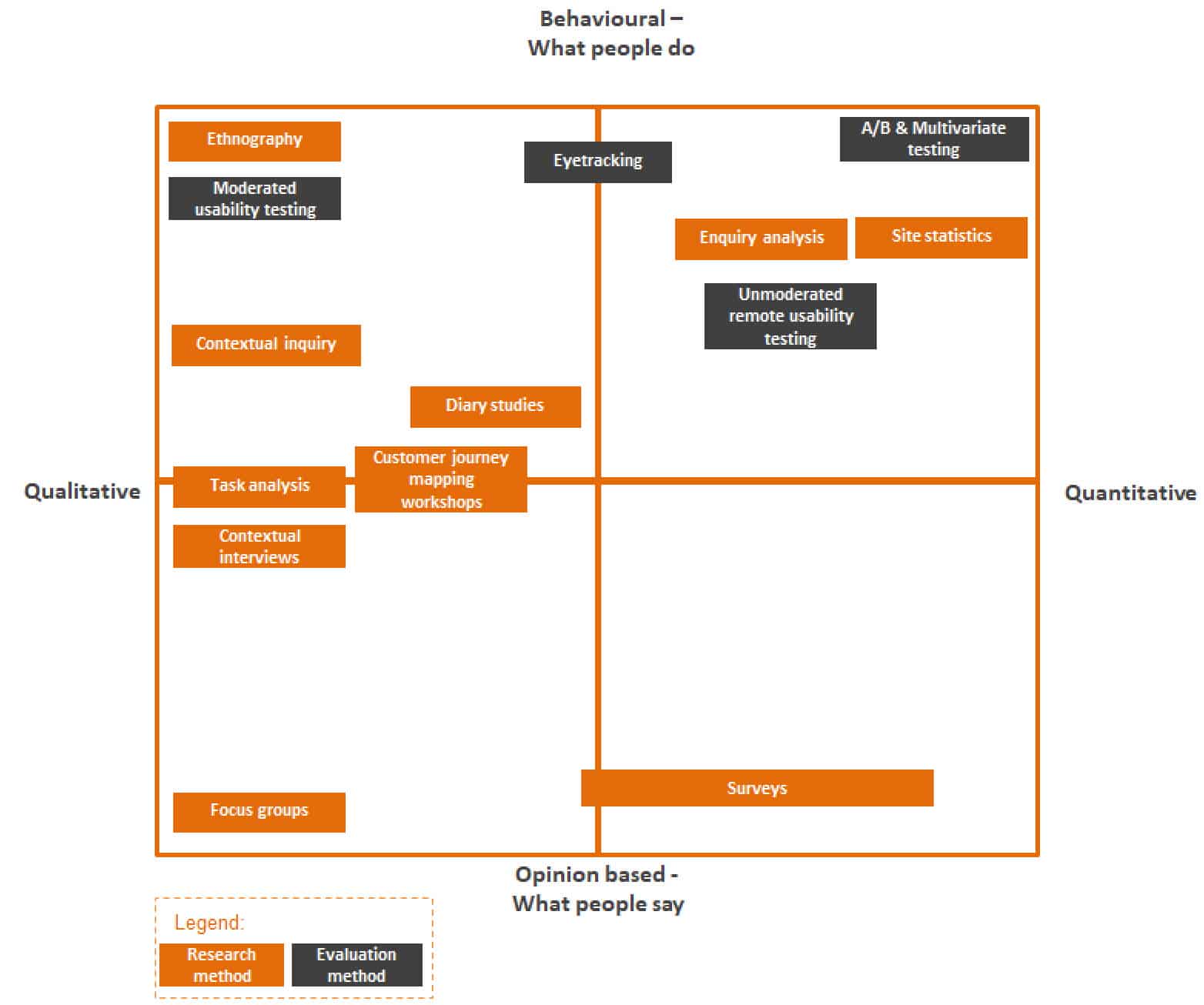

To help you plan your research approach we have created the following diagram which approximately shows where each UX method sits:

Figure: Comparing UX research methods

So, what can we learn from the Australian 2019 pre-election poll failure?

Here are my thoughts on how to avoid a poll 'flop' and increase the rigour of your research:

- Be clear on your research objectives and design the right research approach to answer those objectives.
- If you want to know ‘how’ and ‘why’ you need to use qualitative methods.
- Regardless of which research method you use, a representative sample is critical to give you valid and reliable data you are confident in.
- Conducting and analysing research using automated technologies still has a way to go. The help of AI in the future might change this, but we are just not there yet.
- Always consider using a mix of qualitative and quantitative research methods and triangulate your data.
- Participants lie! As I recently presented at the Design Research conference, design your research to encourage users to tell the truth!

Following the above tips can really make a difference to the rigour of your research study. After all, obtaining accurate, reliable and valid data is what researchers should strive for.

How can you learn more?

If you are interested in learning more about each of these research methods and how to design your research approach, consider our upcoming 3-day UX course and certification course, or register your interest for our UX Accelerator course. All of our UX training covers different quantitative and qualitative research methods, including effective survey design. We also spend significant time on how to design your research approach depending on your target audience and how to conduct different qualitative UX methods.