As UX researchers, whether we are conducting early exploratory user research or a quick, ‘lean’ usability test, we know that due diligence in analysis is a necessary part of our trade. But have you ever experienced ‘paralysis by analysis’, or felt overwhelmed by the amount of data collected? You may have just spent a month conducting contextual inquiries with your user base, and now face the seemingly impossible task of pulling it all together. Or you may be working in an Agile/Lean UX environment, have just finished four to six test sessions, and need to work out the key insights quickly, because they are due tomorrow. How do you get a handle on all this information and distil it into meaningful insights?

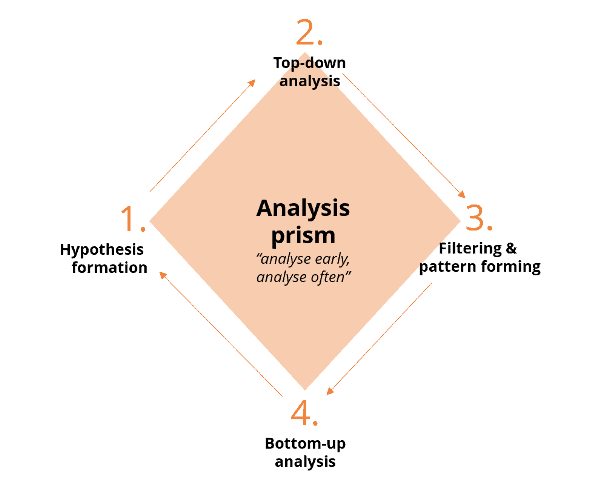

Analysis prism

There are multiple well-documented analysis methods available. In the academic world, qualitative research in particular has formalised methods of analysis (such as grounded theory) and tools to assist with them (such as NVivo). However, the goals of commercial research, especially in ‘Lean UX’ environments, together with budget and time restrictions, demand a different approach. That approach needs to let key insights be distilled quickly while keeping them grounded in evidence. We have developed an ‘Analysis prism’ that summarises the key principles of such an approach, blending a range of analysis methods to ensure insights are relevant, actionable and defensible.

The overarching lens through which to view the process is ‘analyse early, analyse often’. In other words, don’t wait until the last interview to start analysing. It’s good practice, especially for qualitative research, to start writing down ‘emerging themes’ from the first session. Say you have 12 user interviews to conduct. After each session, revisit your research objectives and write down your top-of-mind thoughts on how that interview answered them. Note any interesting thoughts, ideas or opportunities so you can revisit them during analysis once all the interviews are complete.

There are four corners to our prism, and the first is to develop hypotheses before commencing the research. As Jeff Gothelf and Josh Seiden acknowledge in their book Lean UX, whether we like to admit it or not, many designs are based on assumptions about the user. Developing hypotheses early makes these assumptions explicit, so they can be deliberately checked against what you observe with users. It also improves the overall rigour of the research process. A few tips for developing hypotheses:

- Write them in declarative form so that each one can be clearly confirmed or disproved, e.g. ‘all cats are grey’;

- Although they are written like findings, state clearly in your analysis notes or spreadsheet that they are hypotheses, to be evaluated throughout and at the end of the research to confirm whether they held.

We undertook this exercise recently with a client, explicitly laying out ‘what we thought we would find’ in terms of both users’ decision-making and the usability issues they would have with the tool being tested. It was fascinating and helpful to look back at the end of the project and see how far the research had taken us: almost half of our hypotheses turned out to be incorrect. This also makes the exercise a great way to demonstrate why the research was worth conducting in the first place! It also helps us challenge many of the assumptions that drive our behaviours and decisions.

Our next ‘corner’ is ‘top-down’ analysis. During the research, write down your top-of-mind thoughts and what you think the emerging themes are after each interview or testing session. It is also useful to hold a ‘post-research analysis’ session with the project team immediately after the research has been conducted. Structure this session around the key research objectives, and refer back to your original hypotheses. Aim for a free-flowing, top-of-mind discussion of what each team member found. This allows the main themes and insights to bubble up. A Post-it note or index card sort works well here: each person writes down what they found, and the team then groups the notes into key themes. You will have enthusiastic moments of ‘oh yes, I found that too’ and doubtful moments of ‘I didn’t get that, I’ll have to check my notes’. Appoint someone to synthesise the output of the session.

The third corner of our prism is to put all of this through a filtering and pattern-forming process. Filtering means coming back to the research objectives and asking: ‘yes, this observation or insight was interesting, but is it relevant to our objectives?’ Pattern-forming is where you get creative, apply your conceptual thinking skills and identify relationships among the insights and themes. You can use existing models of user behaviour as a ‘coat hanger’ to hang your insights on, or generate a new model that visually illustrates the relationships you’ve found in the data. For example, in a recent decision-making study for a client, we used an existing model (the four stages of competence) to show the differing levels of understanding we found, and then modelled the key insights as a decision-making funnel running from attitudinal inputs to behavioural outputs. Think of infographics, which are more commonly used to present figures from quantitative research, but fuelled instead by rich qualitative insights, relationships and patterns, drawing on existing models and theories where relevant.

The final principle is ‘bottom-up’ analysis. You’ve done all your ‘thinking’ about what you believe you’ve found, but now you need to go back through your notes and/or transcripts to make sure you have not missed anything. This requires discipline and patience, but you owe it to the research process to be thorough, so that your own biases do not cause you to overlook key themes, issues or insights. If you rely only on ‘top-down’ analysis, you risk biasing your research through the peak-end rule: you remember the ‘peak’ participant who articulated something well, and the last couple of interviews you did. Due diligence, and including every participant’s data in the analysis, are critical to ensuring the results you end up with are accurate and defensible.

Although we have presented these principles in order, implying a sequence, there is an ebb and flow to analysis. Sometimes you need to go back to your data (‘bottom-up’ analysis), then come back up for ‘air’ and look at the big picture again (‘top-down’ analysis), repeating this several times before you’ve ‘cracked’ an insight.

In summary

- Good analysis should be an integral part of the research process to ensure key insights are distilled, whilst still being grounded in evidence;

- Our ‘Analysis prism’ summarises the key principles required for rigorous analysis: ‘analyse early, analyse often’, and apply due diligence and conceptual thinking throughout.

Tip

Combine best practice in analysis with triangulation (drawing on multiple data sources or research methods) to add rigour to your research approach.